by Valluru B. Rao

M&T Books, IDG Books Worldwide, Inc.

ISBN: 1558515526 Pub Date: 06/01/95


C++ Neural Networks and Fuzzy Logic


The input and output layers are fixed by the number of inputs and outputs we are using. In our case, the output is a single number: the expected change in the S&P 500 index 10 weeks from now. The input layer size will be dictated by the number of inputs we have after preprocessing; you will see more on this soon. There can be either one or two middle (hidden) layers. It is best to choose the smallest number of neurons possible for a given problem to allow for generalization; with too many neurons, the network tends to memorize the training patterns. We will use one hidden layer. The size of the first hidden layer is generally recommended to be between one-half and three times the size of the input layer. If a second hidden layer is present, it may have between three and ten times the number of output neurons. The best way to determine the optimum size is by trial and error.
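The sizing rules of thumb above can be collected into a small helper. This is only a sketch: the function names `firstHiddenRange` and `secondHiddenRange` are hypothetical, rounding the lower bound up to a whole neuron is our own assumption, and the final size should still be settled by trial and error.

```cpp
#include <cassert>
#include <cmath>
#include <utility>

// Rule-of-thumb range for the first hidden layer: one-half to three
// times the number of input neurons. Rounding the lower bound up to a
// whole neuron is an assumption, not part of the rule itself.
std::pair<int, int> firstHiddenRange(int inputNeurons) {
    assert(inputNeurons > 0);
    const int low = static_cast<int>(std::ceil(inputNeurons / 2.0));
    const int high = 3 * inputNeurons;
    return {low, high};
}

// Rule-of-thumb range for an optional second hidden layer: three to
// ten times the number of output neurons.
std::pair<int, int> secondHiddenRange(int outputNeurons) {
    assert(outputNeurons > 0);
    return {3 * outputNeurons, 10 * outputNeurons};
}
```

With the 15 inputs arrived at later in this section, `firstHiddenRange(15)` gives 8 to 45 neurons; with the single output, a second hidden layer would hold 3 to 10 neurons.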

NOTE: You should try to make sure that there are enough training examples for your trainable weights. In other words, your architecture may be dictated by the number of input training examples, or facts, you have. In an ideal world, you would want about 10 or more facts for each weight. A 10-10-1 architecture has 10 × 10 + 10 × 1 = 110 weights, so you should aim for about 1100 facts. The smaller the ratio of facts to weights, the more likely you are to undertrain your network, which will lead to very poor generalization capability.
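The weight arithmetic in the note is easy to automate. The sketch below counts the connection weights of a fully connected feedforward network from its layer sizes and, like the note's 10-10-1 count, leaves out bias weights; `countWeights` and `factsNeeded` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Counts connection weights between consecutive, fully connected
// layers, e.g. {10, 10, 1} -> 10*10 + 10*1. Bias weights are not counted.
std::size_t countWeights(const std::vector<std::size_t>& layers) {
    assert(layers.size() >= 2);
    std::size_t weights = 0;
    for (std::size_t i = 0; i + 1 < layers.size(); ++i) {
        weights += layers[i] * layers[i + 1];
    }
    return weights;
}

// Training facts suggested by a facts-per-weight ratio
// (about 10 per weight in the ideal case described above).
std::size_t factsNeeded(const std::vector<std::size_t>& layers,
                        std::size_t factsPerWeight = 10) {
    return countWeights(layers) * factsPerWeight;
}
```

For example, `factsNeeded({10, 10, 1})` returns 1100, matching the figure in the note.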

We now begin the preprocessing effort. As mentioned before, this will likely be where you, the neural network designer, will spend most of your time.

Let’s look at the raw data for the problem we want to solve. There are a couple of ways we can start preprocessing the data to reduce the number of inputs and enhance the variability of the data:

We are left with the following indicators:

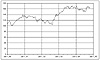

Raw data for the period from January 4, 1980 to October 28, 1983 is taken as the training period, for a total of 200 weeks of data. The following 50 weeks are kept in reserve as a test period, to see whether the predictions are valid outside of the training interval. The last date of this period is October 19, 1984. Let’s look at the raw data now. (The disk available with this book contains data covering the period from January 1980 to December 1992.) Figures 14.3 through 14.5 plot a number of these indicators over the training plus test intervals:
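The chronological split described above can be sketched as follows, assuming the weekly records are already loaded and sorted by date. `TrainTestSplit` and `splitByWeeks` are hypothetical names; only the 200-week and 50-week sizes come from the text.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Holds the two chronological intervals (hypothetical helper type).
template <typename Record>
struct TrainTestSplit {
    std::vector<Record> train;
    std::vector<Record> test;
};

// Takes the first trainWeeks records for training and the next
// testWeeks records for testing. Records are assumed to be in date order.
template <typename Record>
TrainTestSplit<Record> splitByWeeks(const std::vector<Record>& weeks,
                                    std::size_t trainWeeks = 200,
                                    std::size_t testWeeks = 50) {
    assert(weeks.size() >= trainWeeks + testWeeks);
    const auto trainEnd = weeks.begin() + static_cast<std::ptrdiff_t>(trainWeeks);
    const auto testEnd = trainEnd + static_cast<std::ptrdiff_t>(testWeeks);
    TrainTestSplit<Record> result;
    result.train.assign(weeks.begin(), trainEnd);
    result.test.assign(trainEnd, testEnd);  // no shuffling: test follows training
    return result;
}
```

The test weeks are taken immediately after the training weeks, without shuffling, so the network is judged only on data from outside the training interval.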

Figure 14.3 The S&P 500 Index for the period of interest.

Figure 14.4 Long-term and short-term interest rates.

Figure 14.5 Breadth indicators on the NYSE: Advancing/Declining issues and New Highs/New Lows.

Table 14.1 shows a sample of a few lines of the data. Note that the order of parameters is the same as listed above.

| Date | 3Mo TBills | 30YrTBonds | NYSE-Adv/Dec | NYSE-NewH/NewL | SP-Close |
|---|---|---|---|---|---|
| 1/4/80 | 12.11 | 9.64 | 4.209459 | 2.764706 | 106.52 |
| 1/11/80 | 11.94 | 9.73 | 1.649573 | 21.28571 | 109.92 |
| 1/18/80 | 11.9 | 9.8 | 0.881335 | 4.210526 | 111.07 |
| 1/25/80 | 12.19 | 9.93 | 0.793269 | 3.606061 | 113.61 |
| 2/1/80 | 12.04 | 10.2 | 1.16293 | 2.088235 | 115.12 |
| 2/8/80 | 12.09 | 10.48 | 1.338415 | 2.936508 | 117.95 |
| 2/15/80 | 12.31 | 10.96 | 0.338053 | 0.134615 | 115.41 |
| 2/22/80 | 13.16 | 11.25 | 0.32381 | 0.109091 | 115.04 |
| 2/29/80 | 13.7 | 12.14 | 1.676895 | 0.179245 | 113.66 |
| 3/7/80 | 15.14 | 12.1 | 0.282591 | 0 | 106.9 |
| 3/14/80 | 15.38 | 12.01 | 0.690286 | 0.011628 | 105.43 |
| 3/21/80 | 15.05 | 11.73 | 0.486267 | 0.027933 | 102.31 |
| 3/28/80 | 16.53 | 11.67 | 5.247191 | 0.011628 | 100.68 |
| 4/3/80 | 15.04 | 12.06 | 0.983562 | 0.117647 | 102.15 |
| 4/11/80 | 14.42 | 11.81 | 1.565854 | 0.310345 | 103.79 |
| 4/18/80 | 13.82 | 11.23 | 1.113287 | 0.146341 | 100.55 |
| 4/25/80 | 12.73 | 10.59 | 0.849807 | 0.473684 | 105.16 |
| 5/2/80 | 10.79 | 10.42 | 1.147465 | 1.857143 | 105.58 |
| 5/9/80 | 9.73 | 10.15 | 0.513052 | 0.473684 | 104.72 |
| 5/16/80 | 8.6 | 9.7 | 1.342444 | 6.75 | 107.35 |
| 5/23/80 | 8.95 | 9.87 | 3.110825 | 26 | 110.62 |
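A row of Table 14.1 maps naturally onto a small record type. The sketch below assumes each line of the data file is whitespace-separated in the same column order as the table; the actual file layout on the book's disk is not described here, so treat `WeeklyRecord` and `parseRecord` as hypothetical and adapt the reader to the real format.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// One week of raw data, in the column order of Table 14.1.
struct WeeklyRecord {
    std::string date;              // e.g. "1/4/80"
    double tbill3mo = 0.0;         // 3Mo TBills
    double tbond30yr = 0.0;        // 30YrTBonds
    double advDecRatio = 0.0;      // NYSE-Adv/Dec
    double newHighLowRatio = 0.0;  // NYSE-NewH/NewL
    double spClose = 0.0;          // SP-Close
};

// Parses one whitespace-separated line (assumed layout) such as
// "1/4/80 12.11 9.64 4.209459 2.764706 106.52".
// Returns false if any field is missing or not a number.
bool parseRecord(const std::string& line, WeeklyRecord& out) {
    std::istringstream in(line);
    return static_cast<bool>(in >> out.date >> out.tbill3mo >> out.tbond30yr
                                >> out.advDecRatio >> out.newHighLowRatio
                                >> out.spClose);
}
```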

For each of the five inputs, we want to use a function that highlights rate-of-change features. We will use the following function (as originally proposed by Jurik) for this purpose.

ROC(n) = (input(t) - BA(t - n)) / (input(t) + BA(t - n))

where input(t) is the input’s current value and BA(t - n) is a five-unit block average of adjacent values centered on the value n periods ago.
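The ROC formula can be sketched directly over one input's weekly series, indexed from 0. This assumes the five-unit block average covers the two values on either side of week t - n, and it returns 0 when the denominator is zero, a case the text does not address; `blockAverage5` and `roc` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Five-unit block average centered on index c:
// the mean of series[c - 2] through series[c + 2].
double blockAverage5(const std::vector<double>& series, std::size_t c) {
    assert(c >= 2 && c + 2 < series.size());
    double sum = 0.0;
    for (std::size_t i = c - 2; i <= c + 2; ++i) {
        sum += series[i];
    }
    return sum / 5.0;
}

// ROC(n) = (input(t) - BA(t - n)) / (input(t) + BA(t - n)).
// Requiring n >= 2 keeps the centered block at or before week t.
double roc(const std::vector<double>& series, std::size_t t, std::size_t n) {
    assert(n >= 2 && t >= n + 2 && t < series.size());
    const double current = series[t];
    const double ba = blockAverage5(series, t - n);
    const double denom = current + ba;
    if (denom == 0.0) {
        return 0.0;  // assumption: the text does not cover a zero denominator
    }
    return (current - ba) / denom;
}
```

Because every indicator shown in Table 14.1 is non-negative, the result lies between -1 and +1 whenever the denominator is nonzero, so ROC values reach the network on a bounded scale.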

Now we need to decide how many of these features to use. Since we are making a prediction 10 weeks into the future, we will also take data from as far back as 10 weeks; this gives ROC(10). We will use one other rate of change as well, ROC(3). This adds 5 × 2 = 10 inputs to our network, for a total of 15. All of the preprocessing can be done with a spreadsheet.
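Putting the pieces together, each week's input vector holds the five raw values plus ROC(10) and ROC(3) for each of them. The sketch below includes a compact ROC(n) helper so that it stands alone; `rocAt` and `buildInputs` are hypothetical names, and the order of the 15 inputs is an assumption, since the text fixes only their count.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// ROC(n): current value versus the five-unit block average centered
// n weeks back. Returns 0 if the denominator is 0 (assumption).
double rocAt(const std::vector<double>& s, std::size_t t, std::size_t n) {
    assert(n >= 2 && t >= n + 2 && t < s.size());
    double ba = 0.0;
    for (std::size_t i = t - n - 2; i <= t - n + 2; ++i) ba += s[i];
    ba /= 5.0;
    const double denom = s[t] + ba;
    return denom == 0.0 ? 0.0 : (s[t] - ba) / denom;
}

// Builds the 15 network inputs for week t from the five raw series:
// indices 0-4 hold the current values, 5-9 ROC(10), 10-14 ROC(3).
std::array<double, 15> buildInputs(
        const std::array<std::vector<double>, 5>& series, std::size_t t) {
    std::array<double, 15> x{};
    for (std::size_t k = 0; k < 5; ++k) {
        x[k] = series[k][t];
        x[5 + k] = rocAt(series[k], t, 10);
        x[10 + k] = rocAt(series[k], t, 3);
    }
    return x;
}
```

Since ROC(10) averages weeks t - 12 through t - 8, the first 12 weeks of data (indices 0 to 11) cannot yield a complete input vector.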
